Leaking at the Prompt: How Many Professionals Are Entering Sensitive Info Into GPT?

by David Beníček

Generative AI tools like ChatGPT and Gemini are becoming everyday workplace companions, but not without risks.

A new Smallpdf survey of 1,000 U.S. professionals uncovered how often workers accidentally jeopardize sensitive company data when using AI at work. The results highlight widespread blind spots around AI security, showing just how vulnerable organizations can be when employees use these tools without proper training or policies.

At Smallpdf, we commissioned this research to better understand how professionals are handling sensitive information in an era of AI-driven work. With much of that information stored and shared through digital documents, our goal is to highlight the risks and help businesses protect their workflows.

These findings matter because they reveal how quickly AI adoption has outpaced workplace safeguards. As professionals rely on generative AI to speed up daily tasks, many risk exposing confidential client information, company documents, and even login credentials, potentially opening the door to costly data breaches and compliance violations.

Key Takeaways

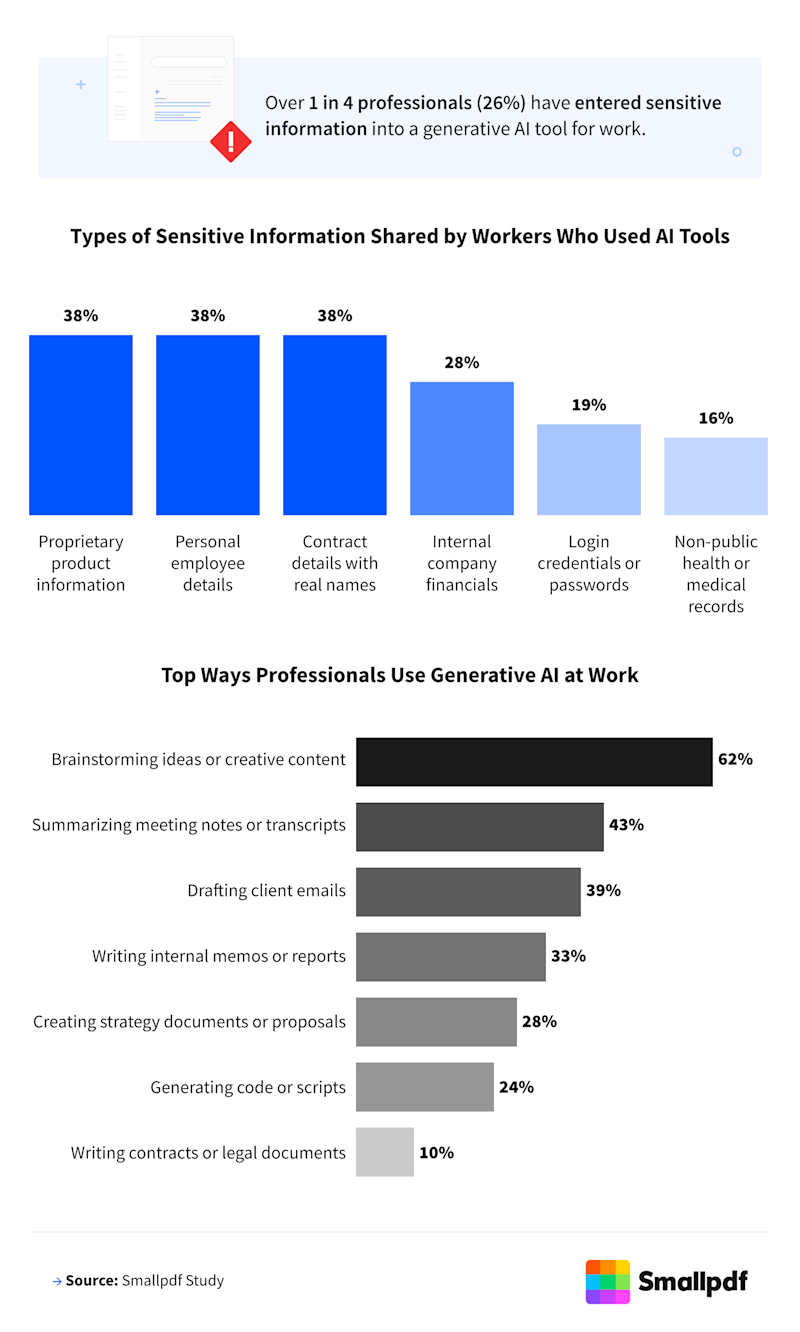

- Over 1 in 4 professionals (26%) have entered sensitive information into a generative AI tool for work.

- Nearly 1 in 5 professionals (18%) have entered real client or customer names into a generative AI tool for work.

- About 1 in 5 professionals (19%) have entered login credentials into a generative AI tool.

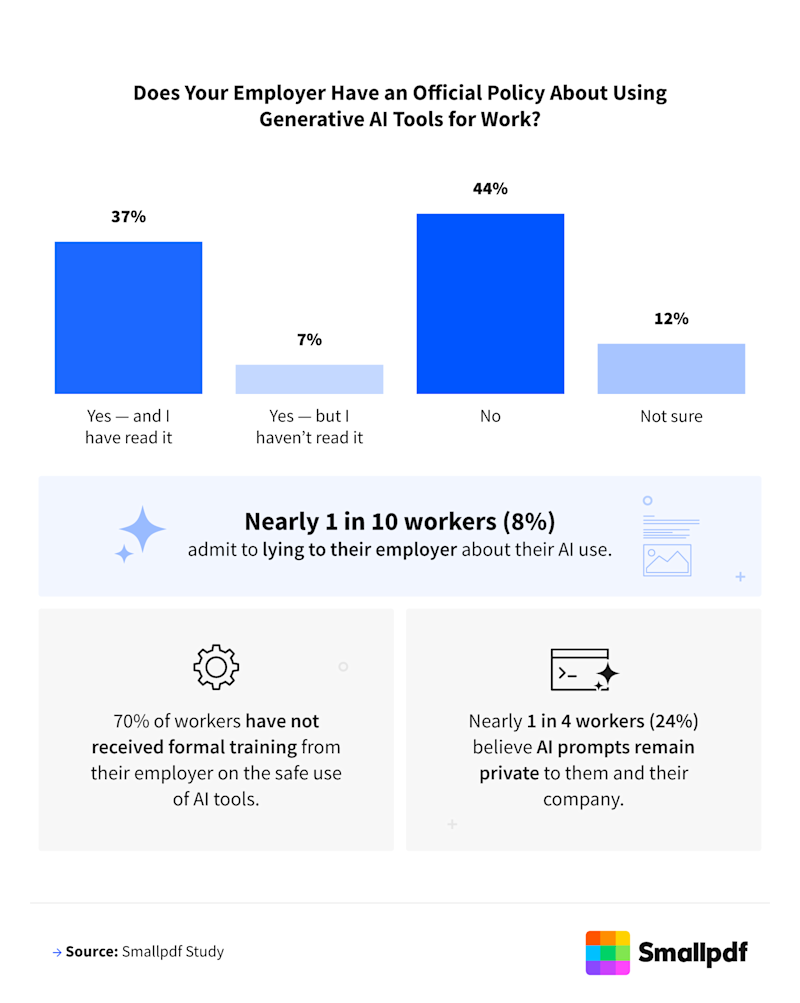

- Nearly 1 in 10 workers (8%) admit to lying to their employer about their AI use.

Who Uses AI Most and What They're Sharing

AI use isn't limited to tech teams. Professionals across industries and job levels rely on these tools daily, but the data shows that many don't think twice before sharing sensitive details.

Infographic showing types of sensitive data professionals have shared with generative AI and common workplace uses.

- Over 3 in 4 U.S. professionals (78%) use generative AI tools for work at least weekly.

- Over 1 in 4 professionals (26%) have entered sensitive information into a generative AI tool for work. Nearly 1 in 5 professionals (18%) have entered real client or customer names.

- Nearly 1 in 5 professionals (17%) do not remove or anonymize sensitive details before entering them into AI tools.

Top 5 Industries Using AI Weekly or More

- Tech

- Education

- Healthcare

- Finance

- Manufacturing

Percentage of Each Job Level Using AI Several Times a Week or More

- Senior manager – 90%

- Mid-level manager – 85%

- First-level manager – 81%

- Executive and senior leadership – 81%

- Mid-level employee – 75%

- Entry-level employee – 67%

Departments Most Likely to Use Generative AI Weekly or More Often

- Marketing, advertising, or communications – 86%

- IT or software development – 86%

- HR or people operations – 85%

- Education or training – 83%

- Sales or business development – 81%

Top 3 Generative AI Tools Used Most Often for Work

- ChatGPT (OpenAI) – 68%

- Copilot (Microsoft) – 12%

- Gemini (Google) – 12%

Percentage of Users Who Enter Work-Related Prompts Without Removing Sensitive Details

- Gemini (Google) – 23%

- Claude (Anthropic) – 23%

- ChatGPT (OpenAI) – 17%

- Copilot (Microsoft) – 10%

- Perplexity – 8%

About 1 in 5 Professionals (19%) Have Entered Login Credentials Into a Generative AI Tool

Among those who have, here's what they've entered:

- Personal email – 47%

- Work email – 43%

- Social media account – 27%

- Cloud storage (e.g., Google Drive, Dropbox) – 25%

- Company software tools (e.g., Salesforce, Jira, Slack) – 22%

- Bank or financial account – 18%

- Internal company portal – 15%

Blind Spots in AI Security

Even as AI adoption surges, many employees underestimate how their prompts can put company data at risk. Our findings reveal the gaps in training, oversight, and awareness that leave businesses exposed.

Infographic on company AI policies, employee training gaps, and how workers are using or misusing generative AI at work.

- Nearly 1 in 10 workers (8%) admit to lying to their employer about their AI use.

- 1 in 10 professionals have little to no confidence in their ability to use AI tools without violating company policy or risking data security.

- 1 in 20 professionals (5%) have received a warning or disciplinary action for how they used AI at work.

- Nearly 1 in 10 professionals (7%) admit to using ChatGPT for a work task even after being told not to.

- 1 in 5 professionals (20%) believe generative AI tools do not store every prompt they enter.

- 3 in 4 professionals (75%) would still use AI tools for work even if they knew every prompt was permanently stored.

According to the Cost of a Data Breach 2025 report by IBM and the Ponemon Institute, organizations that rush to adopt AI without robust security and governance experience significantly higher breach rates and costs than those with proper controls. The report makes it clear that ungoverned AI systems increase vulnerability, especially when employees lack training and oversight.

What's at Stake When Sensitive Data Leaks Into AI

Once data is entered into a platform, it may be stored, shared, or even exposed in ways that professionals cannot control. Leaked client information can result in privacy breaches and erode trust. Entering login credentials risks fraud and unauthorized access to critical business systems, from cloud storage to financial accounts. Sharing internal documents through unsecured AI tools could expose intellectual property, leaving companies vulnerable to competitors or malicious actors.

The IBM and Ponemon Institute report cited above ties weak governance and security around AI to significantly higher breach rates and costs. The consequences range from regulatory fines and compliance violations to reputational damage and long-term loss of client confidence.

For businesses, the stakes are clear: what feels like a small shortcut in an AI prompt can quickly turn into a costly, wide-reaching problem.

How Professionals Can Protect Sensitive Data When Using AI at Work

The risks are real, but so are the solutions. By building safer habits, employees can take advantage of AI's efficiency without exposing their companies to unnecessary risk. These steps are simple but go a long way in preventing costly mistakes:

- Avoid entering sensitive information: Never share client names, financial data, or login credentials in AI prompts.

- Anonymize inputs: Remove or redact any identifying details before using AI tools.

- Follow company policy: Use only AI platforms approved by IT and stay aligned with internal data security rules.

- Pair AI with secure document workflows: Tools like Smallpdf help professionals manage, protect, and share documents safely alongside AI use.

- Keep learning: Take part in training and stay updated on new security best practices.
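To make the "anonymize inputs" step concrete, here is a minimal Python sketch of scrubbing a prompt before it is pasted into an AI tool. It is an illustration under stated assumptions, not a complete safeguard: the function name `redact_prompt`, the `known_names` parameter, and the placeholder tokens are hypothetical, and the two regular expressions only catch email addresses and secrets that are explicitly labeled (for example `password: ...`). Real prompts will contain sensitive details that these patterns miss, so a manual review is still needed.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive detector).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SECRET_RE = re.compile(
    r"\b(password|passwd|pwd|api[_-]?key|token)(\s*[:=]\s*)(\S+)",
    re.IGNORECASE,
)


def redact_prompt(text, known_names=()):
    """Replace labeled secrets, email addresses, and listed names with placeholders."""
    # Redact secrets first so a password containing "@" is not partly matched as an email.
    text = SECRET_RE.sub(
        lambda m: f"{m.group(1)}{m.group(2)}[REDACTED_SECRET]", text
    )
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    # Names cannot be detected reliably with a regex, so the caller supplies them.
    for name in known_names:
        pattern = r"\b" + re.escape(name) + r"\b"
        text = re.sub(pattern, "[REDACTED_NAME]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    prompt = (
        "Draft a renewal email to Jane Doe (jane.doe@acme.example). "
        "Portal login password: Hunter2!"
    )
    print(redact_prompt(prompt, known_names=["Jane Doe"]))
```

Passing client names explicitly is a deliberate choice in this sketch: the person writing the prompt usually knows which names are sensitive, while pattern matching alone does not.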

Staying productive with AI does not have to mean sacrificing security. With awareness and consistent habits, professionals can unlock the benefits of AI while protecting both their work and their organizations.

Methodology

This report is based on original, first-hand research commissioned by Smallpdf. It is designed to understand how professionals are using generative AI in their daily work and what risks come with it. We surveyed 1,000 full-time U.S. employees across industries, job levels, and demographics, ensuring a representative sample of the modern workforce.

The survey covered professionals ranging from entry-level staff to senior executives. Respondents spanned sectors including technology, education, healthcare, finance, and manufacturing. Generationally, 26% were baby boomers and Gen X combined, 56% were millennials, and 18% were Gen Z, reflecting how AI adoption cuts across age groups. The average respondent age was 41. The gender distribution was 41% female, 58% male, and 1% non-binary, underscoring diversity within the sample.

About Smallpdf

Smallpdf helps millions of professionals and businesses simplify digital document management with secure, easy-to-use tools. From protecting sensitive files to streamlining workflows, Smallpdf makes working with PDFs simple. Popular tools include Compress PDF to reduce file size, Merge PDF to combine documents, and PDF to Word to quickly convert files for editing. With Smallpdf, you can manage documents smarter and more efficiently in today's AI-driven workplaces.

Fair Use Statement

The information in this article may be used for noncommercial purposes only. If you share it, please provide a link with proper attribution to Smallpdf.